Bofei Zhang (张博飞)

Email: zhangbofei5675[at]outlook[dot]com

Experience

Career

- 2025/7-Current; Researcher @ TikTok AIIC (AI Innovation Center)

  - Working on SFT & RL for GUI agents

- 2023/3-2025/7; Research Engineer @ Beijing Institute for General Artificial Intelligence (BIGAI)

  - Worked on vision-language models and post-training for agentic tasks

- 2020/6-2023/3; Software Engineer @ ByteDance

  - TikTok & Lark

🚀 Referral (internal referral) available: details here →

Education

- 2018/9-2020/6; Master's in Data Science @ New York University

- 2013/8-2018/5; Bachelor's in Biomedical Engineering @ The Ohio State University

News

| Nov 13, 2025 | TongUI: Building Generalized GUI Agents by Learning from Multimodal Web Tutorials is accepted by AAAI 2026! 🎉; Checkout detail for this project here!     |

|---|---|

| Oct 03, 2025 | New preprint: Efficient Multi-turn RL for GUI Agents via Decoupled Training and Adaptive Data Curation (DART-GUI).

If you find this research helpful, consider starring the GitHub repo and upvoting the HF paper page. |

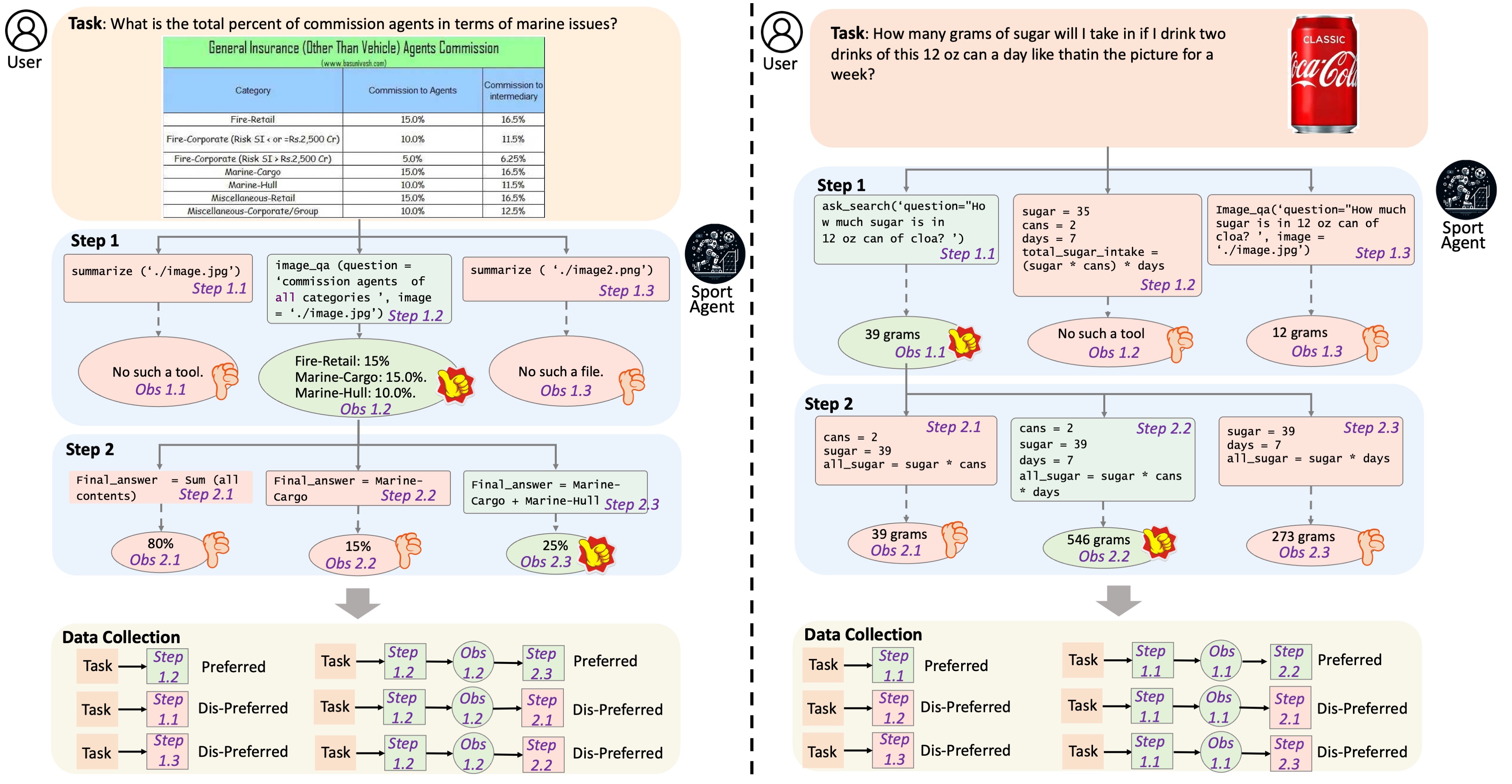

| Sep 26, 2025 | Our paper “Iterative Tool Usage Exploration for Multimodal Agents via Step-wise Preference Tuning” has been accepted by NeurIPS 2025! 🎉 |

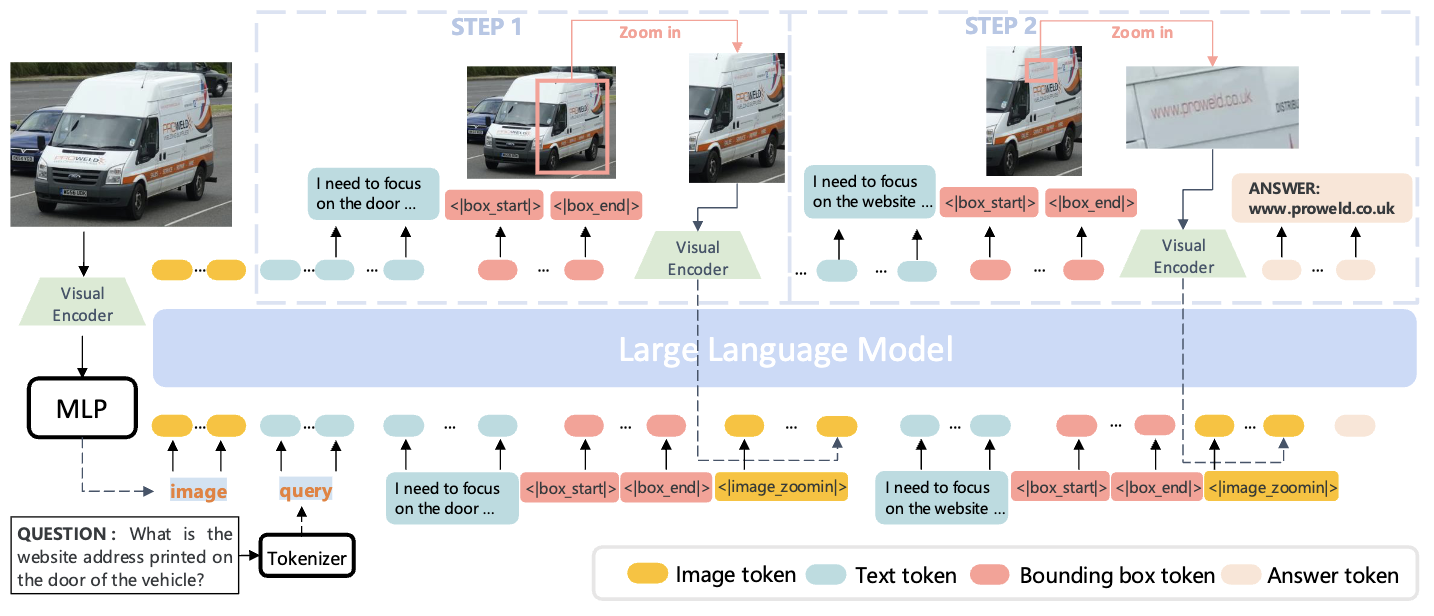

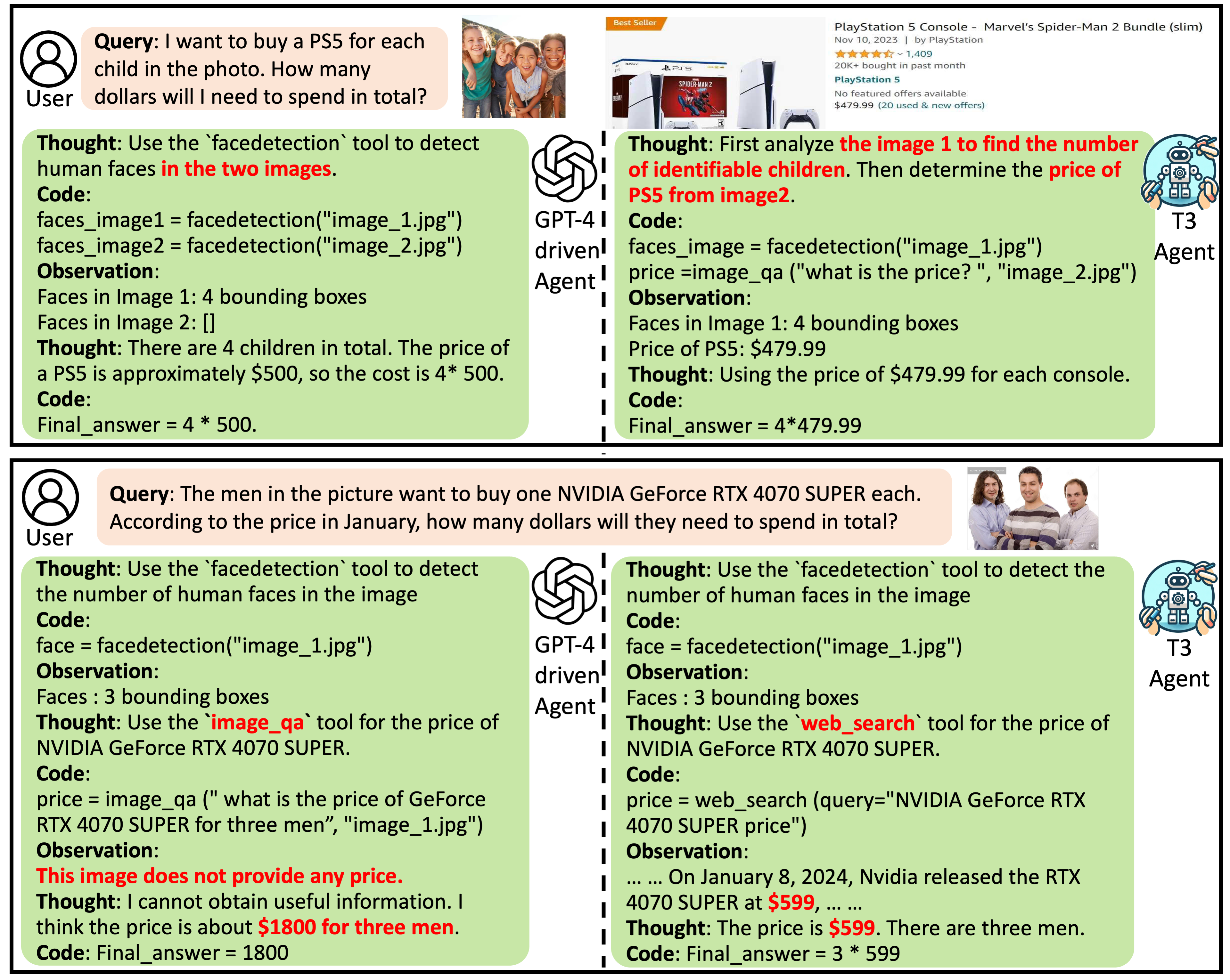

| Feb 07, 2025 | Multi-modal Agent Tuning (MAT): A framework for auto-generating multimodal tool-usage trajectories (20K MM-Traj), boosting MiniCPM & Qwen-VL tool use by 20%. This work is accepted by ICLR 2025! |

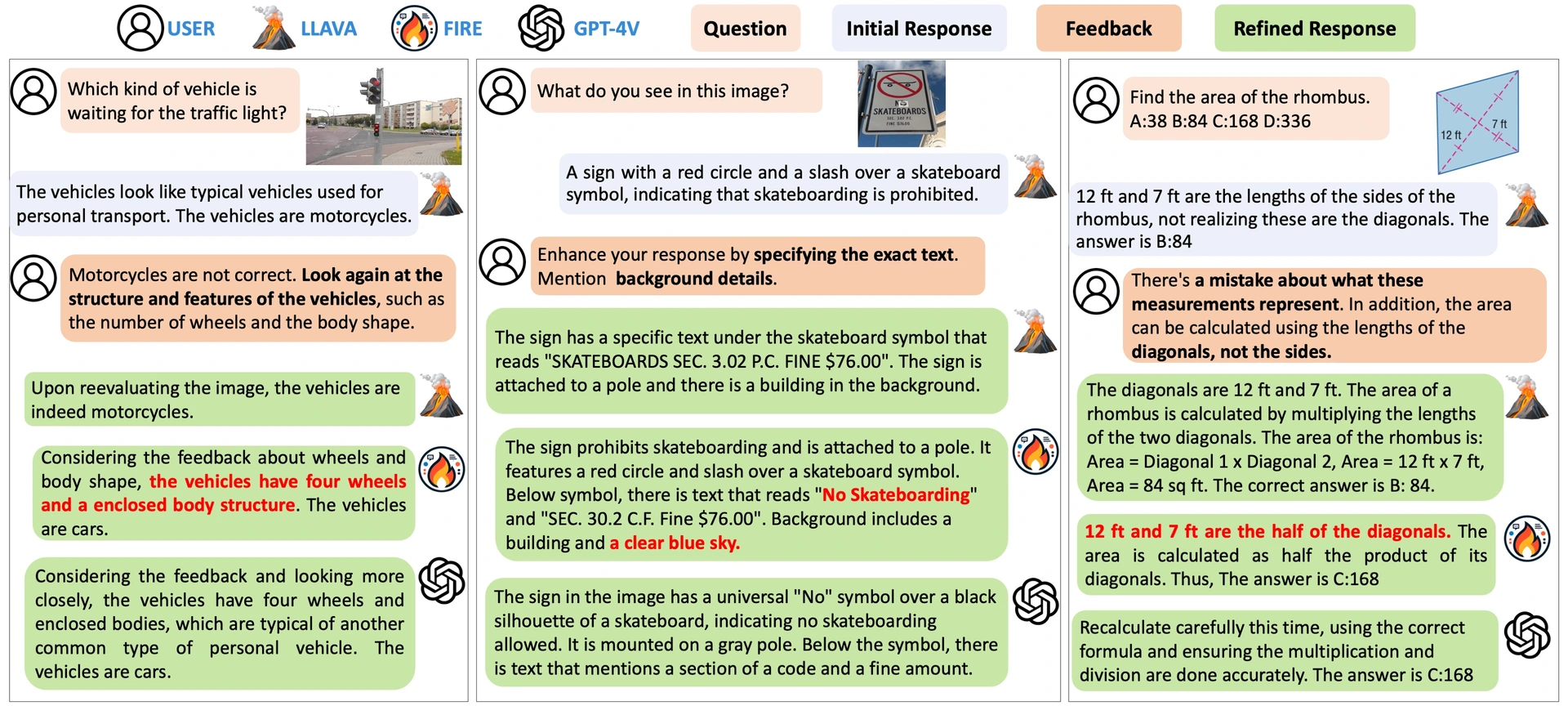

| Aug 02, 2024 | Introducing 🔥FIRE: A Dataset for Feedback Integration and Refinement Evaluation of Multimodal Models. Checkout Here for more details! 🔥FIRE are accepted by NeurIPS 2024! |

Latest Posts

| Date | Post |
|---|---|
| Aug 08, 2025 | ByteDance Referral |
| Jun 14, 2025 | Computer-Use MacOS Agent |
| Feb 07, 2025 | Tutorial of training Multi-modal Agent Tuning projects with LLaMA-Factory |

Selected Publications

\* Equal contribution, ✉ Corresponding author